
J. Ocean Eng. Technol., Volume 36(1), 2022


Abstract

Underwater optical images suffer from various limitations that degrade their quality compared with optical images taken in the atmosphere. Wavelength-dependent attenuation of light and reflection by very small floating particles cause low contrast, blur, and color degradation in underwater images. We constructed an image dataset of Korean seas and enhanced the images by learning the characteristics of underwater images with three deep learning techniques: CycleGAN (cycle-consistent adversarial network), UGAN (underwater GAN), and FUnIE-GAN (fast underwater image enhancement GAN). In addition, the underwater optical images were enhanced using the image processing technique of Image Fusion. For a quantitative performance comparison, we calculated UIQM (underwater image quality measure), which evaluates the enhancement in terms of colorfulness, sharpness, and contrast, and UCIQE (underwater color image quality evaluation), which evaluates it in terms of chroma, luminance, and saturation. For 100 underwater images taken in Korean seas, the average UIQMs of CycleGAN, UGAN, and FUnIE-GAN were 3.91, 3.42, and 2.66, respectively, and the average UCIQEs were 29.9, 26.77, and 22.88, respectively. The average UIQM and UCIQE of Image Fusion were 3.63 and 23.59, respectively. CycleGAN and UGAN improved the image quality both qualitatively and quantitatively in various underwater environments, whereas the performance of FUnIE-GAN varied with the underwater environment. Image Fusion showed good performance in terms of color correction and sharpness enhancement. These methods are expected to be useful for monitoring underwater works and for the autonomous operation of unmanned vehicles by providing a more accurate view of underwater situations.

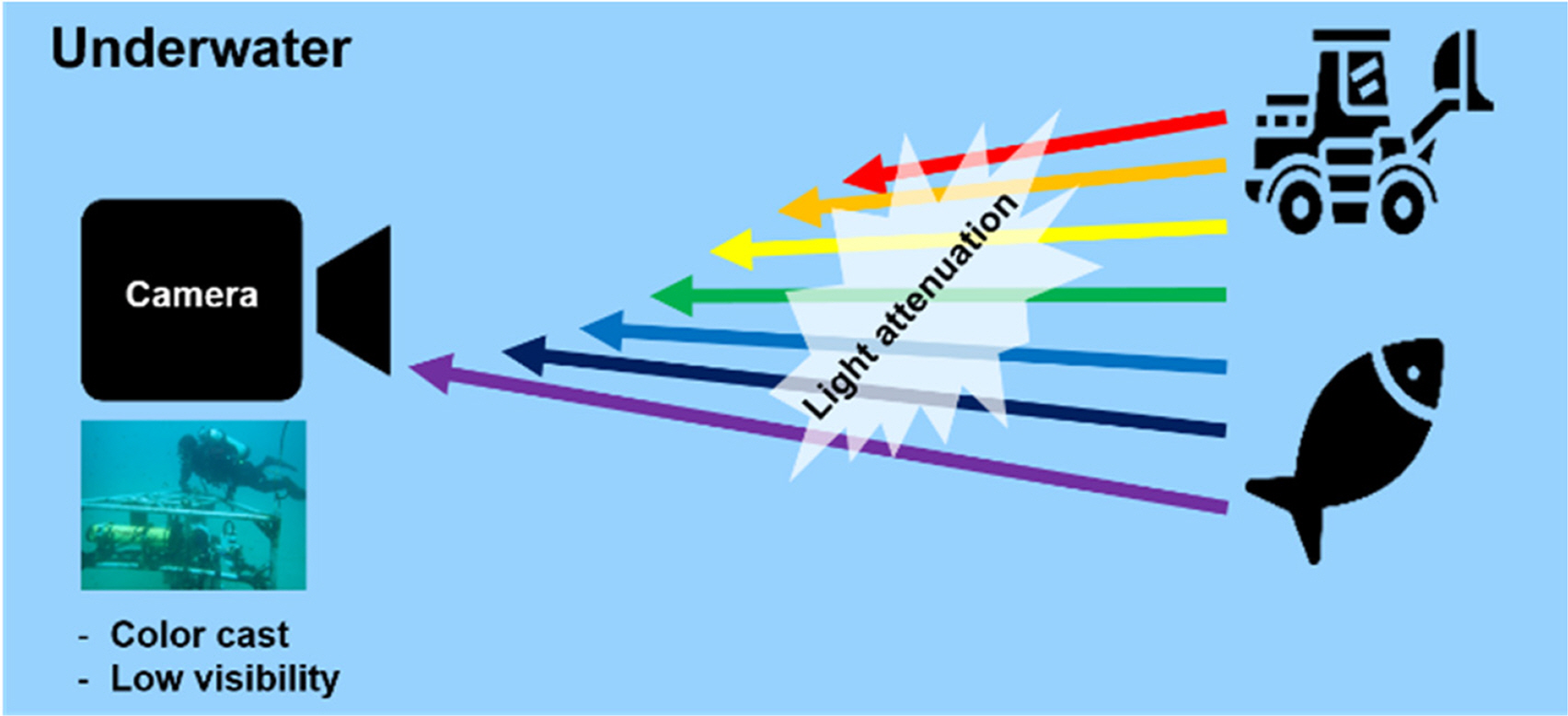

In recent years, unmanned automation technology has been actively used to perform underwater work safely and accurately on behalf of humans in extreme and diverse underwater environments, and image sensing technology that visualizes underwater situations has become an essential enabling technology (Schettini and Corchs, 2010; Bharal, 2015). As shown in Fig. 1, images taken with underwater optical cameras have low contrast and blurred detail owing to the absorption and scattering of light by water and floating matter, such as plankton and sand. The degree of deterioration in image quality varies with the turbidity of the water and the characteristics of the camera. Moreover, unlike in air, red light, which has a longer wavelength than blue or green light, is attenuated more strongly as it passes through water. Therefore, optical images taken underwater tend to be dominated by blue or green rather than red (Mobley, 1994).

Various algorithms have been devised to improve the hue, contrast, and clarity of underwater images. Dark channel prior (DCP) techniques, which exploit the physical phenomenon that hues are degraded differently depending on the wavelength of the light, have been applied alongside general image processing algorithms in several stages, and algorithms based on mathematical models of the underwater optical imaging process have been proposed (He et al., 2010; Li et al., 2012; Ancuti et al., 2017). Furthermore, deep learning techniques that have been successfully applied in computer vision are being used to improve the quality of underwater images (Fabbri et al., 2018; Han et al., 2018; Uplavikar et al., 2019; Chen et al., 2019; Islam et al., 2020; Li and Cavallaro, 2020; Wang et al., 2020; Zhang et al., 2021). Because the performance of deep learning techniques depends critically on how the training data are configured, pairs of underwater images and clean images are required to improve the quality of underwater images. However, it is extremely difficult to obtain a clean image of the same underwater scene free of the physical degradation. Cycle-consistent generative adversarial networks (CycleGAN), which can be trained on unpaired data, have therefore been used to construct pairs of underwater and clean images (Fabbri et al., 2018). It was reported that when the training data include underwater images taken in Korean sea areas, the GAN technique corrects hue better than other image quality improvement techniques (Kim and Kim, 2020).

In this study, the training data consisted of underwater images from the ImageNet database and underwater images taken in Korean sea areas (Deng et al., 2009). CycleGAN, UGAN (underwater GAN), and FUnIE-GAN (fast underwater image enhancement GAN) were trained on these data. To evaluate the image improvement, the underwater image quality measure (UIQM), which quantitatively evaluates colorfulness, sharpness, and contrast in underwater images, and the underwater color image quality evaluation (UCIQE), which measures the chroma, luminance, and saturation of colors, were used to compare the three generative adversarial network (GAN) techniques with an underwater image improvement technique based on image fusion.

A GAN consists of two networks: a generator (G) and a discriminator (D) (Fig. 2). The two networks learn in competition with each other: the generator must produce fake images that can deceive the discriminator, and the discriminator must determine whether the images it receives are real or were created by the generator (Goodfellow et al., 2014). To train a GAN, an optimization problem is solved for the objective function L_GAN(G, D) given in Eq. (1).

In Eq. (1), z is a random input noise, and G and D are the generator and discriminator functions, respectively. Based on Eq. (1), G is trained to minimize the value of the objective function by generating fake images G(z) that deceive D, and D is trained to maximize the value of the objective function by not being deceived by the fake images generated by G. In this study, CycleGAN, UGAN, and FUnIE-GAN were considered as deep learning-based underwater optical image improvement techniques.
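Eq. (1) itself is not reproduced in this copy. As a minimal sketch, assuming the standard objective of Goodfellow et al. (2014), E[log D(x)] + E[log(1 - D(G(z)))], the value that D tries to maximize and G tries to minimize can be estimated from discriminator outputs as follows. The function name and the sample probabilities are illustrative assumptions, not values from the study.

```python
import math

def gan_objective(d_real, d_fake):
    """Sample estimate of E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real images, each in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated images, each in (0, 1)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that is rarely deceived scores close to the maximum of 0.
confident = gan_objective([0.99, 0.98], [0.01, 0.02])
# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
fooled = gan_objective([0.5, 0.5], [0.5, 0.5])
```

The second case evaluates to -2 log 2, which is the value the objective takes when the generator has fully deceived the discriminator.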

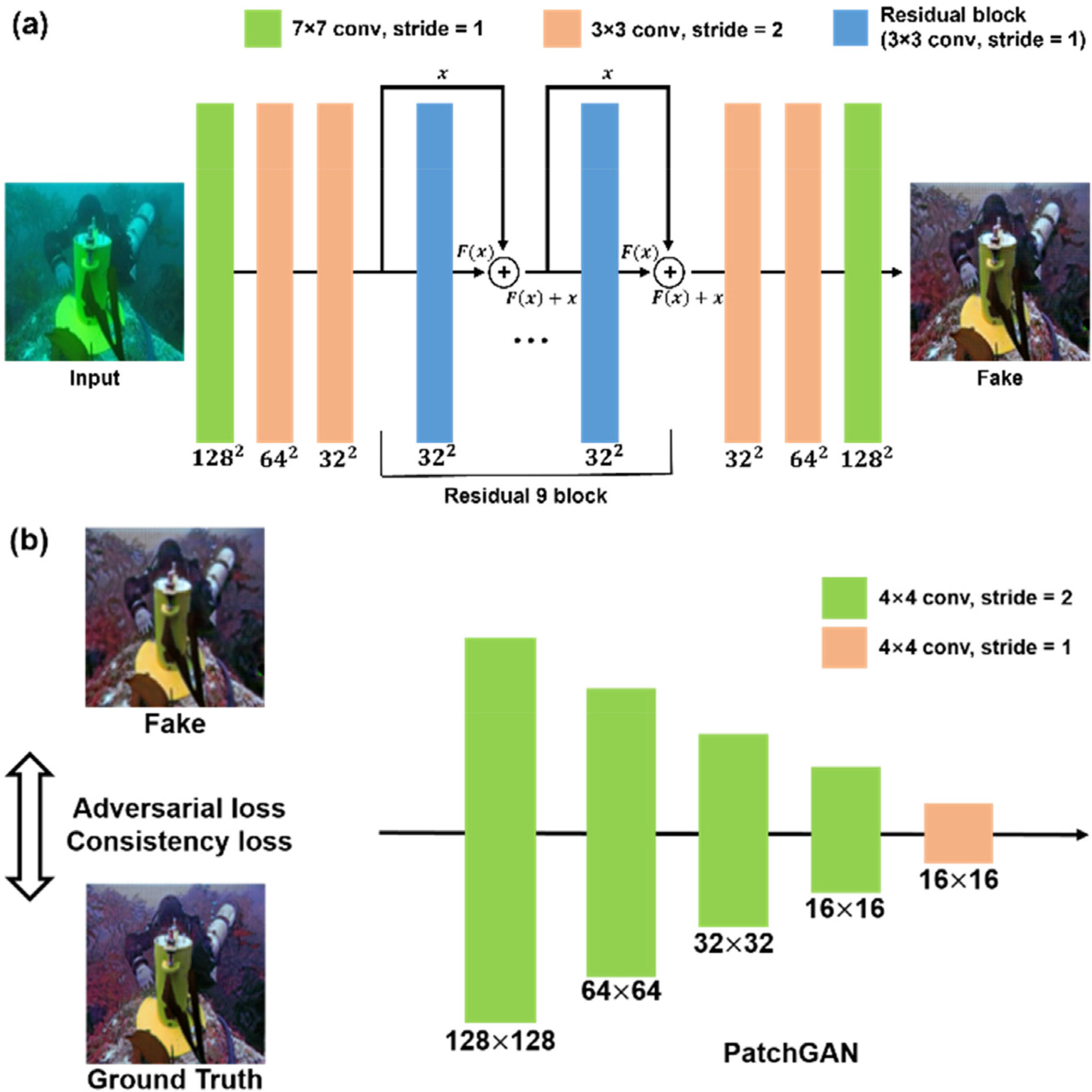

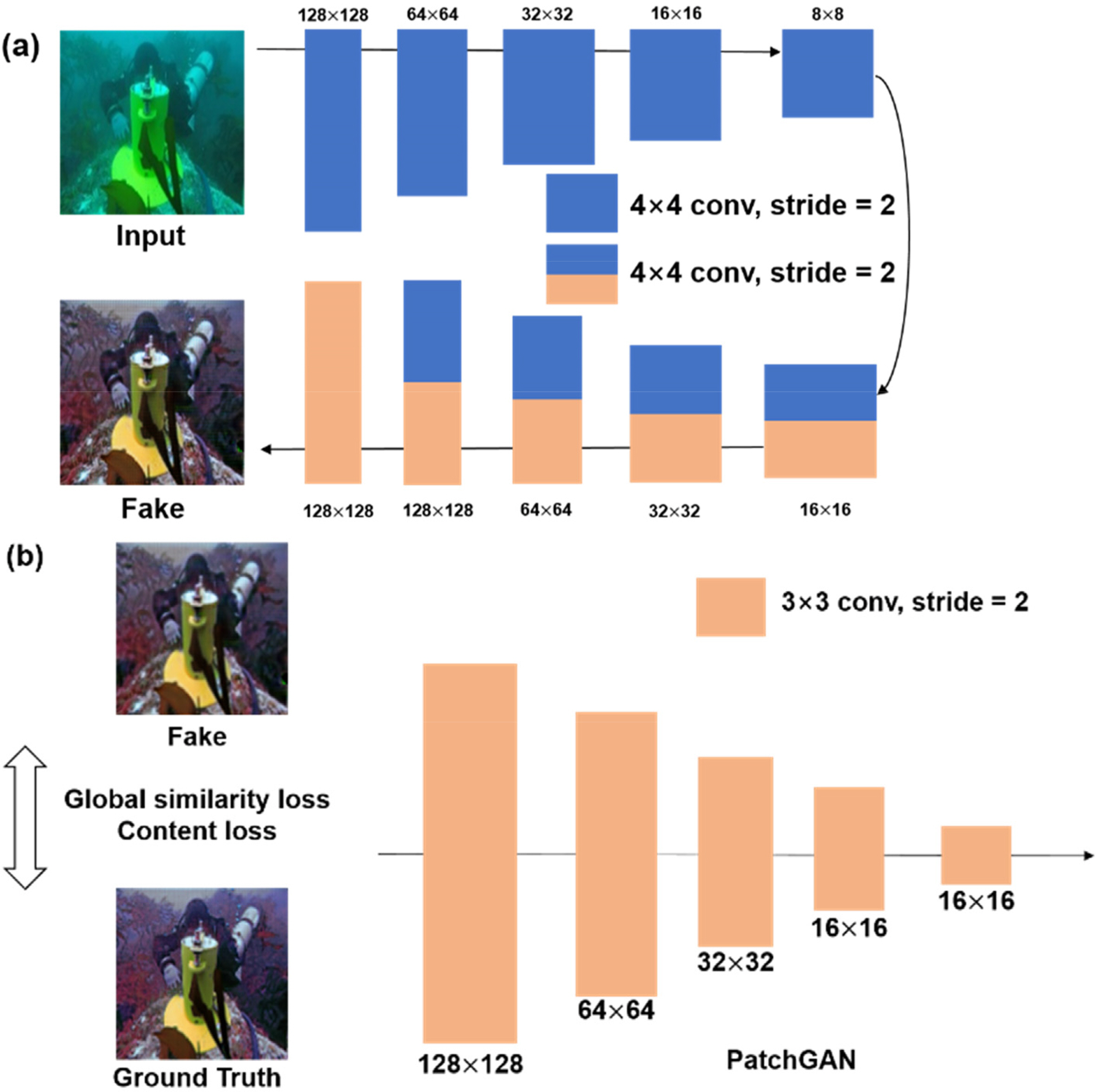

CycleGAN was designed to learn a pair of models (G_X→Y, G_Y→X) that map between data domains X and Y in both directions. CycleGAN consists of one generator and one discriminator for each of the two data domains; each generator is a Resnet-9block network (He et al., 2016) and each discriminator is a PatchGAN with a 70×70 patch size, as shown in Figs. 3(a) and 3(b), respectively (Zhu et al., 2017).

The generator, Resnet-9block, applies the residual block nine times. Each residual block adds its input x to its output F(x), which improves performance by letting the block learn only the additional (residual) information. The discriminator, PatchGAN, consists of five convolutional layers. The first four convolutions use stride 2 and the last uses stride 1, so that a 16×16 feature map is finally obtained using a 70×70 patch. In Eq. (2), the objective function of CycleGAN, L_CycleGAN(G, D), is defined from the GAN adversarial objective function of Eq. (1) and the cycle consistency loss function of Eq. (3). In Eq. (2), X and Y refer to the data domains, and in Eq. (3), x and y represent data in the X and Y domains, respectively. G_X→Y and G_Y→X are the generators that convert data from X to Y and from Y to X, respectively. D_X and D_Y are the discriminators that judge the authenticity of the fake images generated in each data domain.
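The 16×16 feature map size can be checked with simple arithmetic. The sketch below assumes a 256×256 input and "same" padding, neither of which is stated in the text for training, so both are assumptions; the helper names are illustrative.

```python
def conv_output_size(size, stride):
    """Spatial size after one convolution with 'same' padding (ceiling division)."""
    return -(-size // stride)

def patchgan_map_size(input_size, strides):
    """Apply the convolutions in order and return the final feature map size."""
    for stride in strides:
        input_size = conv_output_size(input_size, stride)
    return input_size

# Four stride-2 convolutions followed by one stride-1 convolution.
cyclegan_strides = [2, 2, 2, 2, 1]
map_size = patchgan_map_size(256, cyclegan_strides)  # 256 -> 128 -> 64 -> 32 -> 16 -> 16
```

Under the same assumptions, replacing one of the stride-2 layers with a stride-1 layer would give a 32×32 map instead.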

The cycle consistency loss function adds the difference between an input image and the image reconstructed by mapping it to the other domain and back. This lets the generators learn the characteristics of clean images while preserving the content of the original underwater images. CycleGAN was trained with a batch size of 6, a learning rate of 0.0002, and 60 epochs.
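A minimal sketch of the cycle consistency idea, assuming the L1 form used by Zhu et al. (2017): an image is mapped to the other domain and back, and the reconstruction error is summed over both directions. The toy generators below are hypothetical stand-ins for trained networks, and images are flat lists of pixel values so that the example runs without extra libraries.

```python
def l1_distance(a, b):
    """Mean absolute difference between two images given as flat lists."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def cycle_consistency_loss(x, y, g_xy, g_yx):
    """L1 error after mapping X -> Y -> X plus the error after Y -> X -> Y."""
    forward = l1_distance(g_yx(g_xy(x)), x)
    backward = l1_distance(g_xy(g_yx(y)), y)
    return forward + backward

# Hypothetical generators: one brightens every pixel by 0.1,
# the other darkens by 0.1 (exact inverse) or by 0.05 (imperfect inverse).
brighten = lambda img: [p + 0.1 for p in img]
darken_exact = lambda img: [p - 0.1 for p in img]
darken_partial = lambda img: [p - 0.05 for p in img]

underwater = [0.2, 0.4, 0.6]
clean = [0.5, 0.7, 0.9]

perfect = cycle_consistency_loss(underwater, clean, brighten, darken_exact)
imperfect = cycle_consistency_loss(underwater, clean, brighten, darken_partial)
```

The loss is essentially zero when the two generators invert each other and grows as the reconstruction drifts from the input, which is what ties the generated clean image to the content of the original underwater image.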

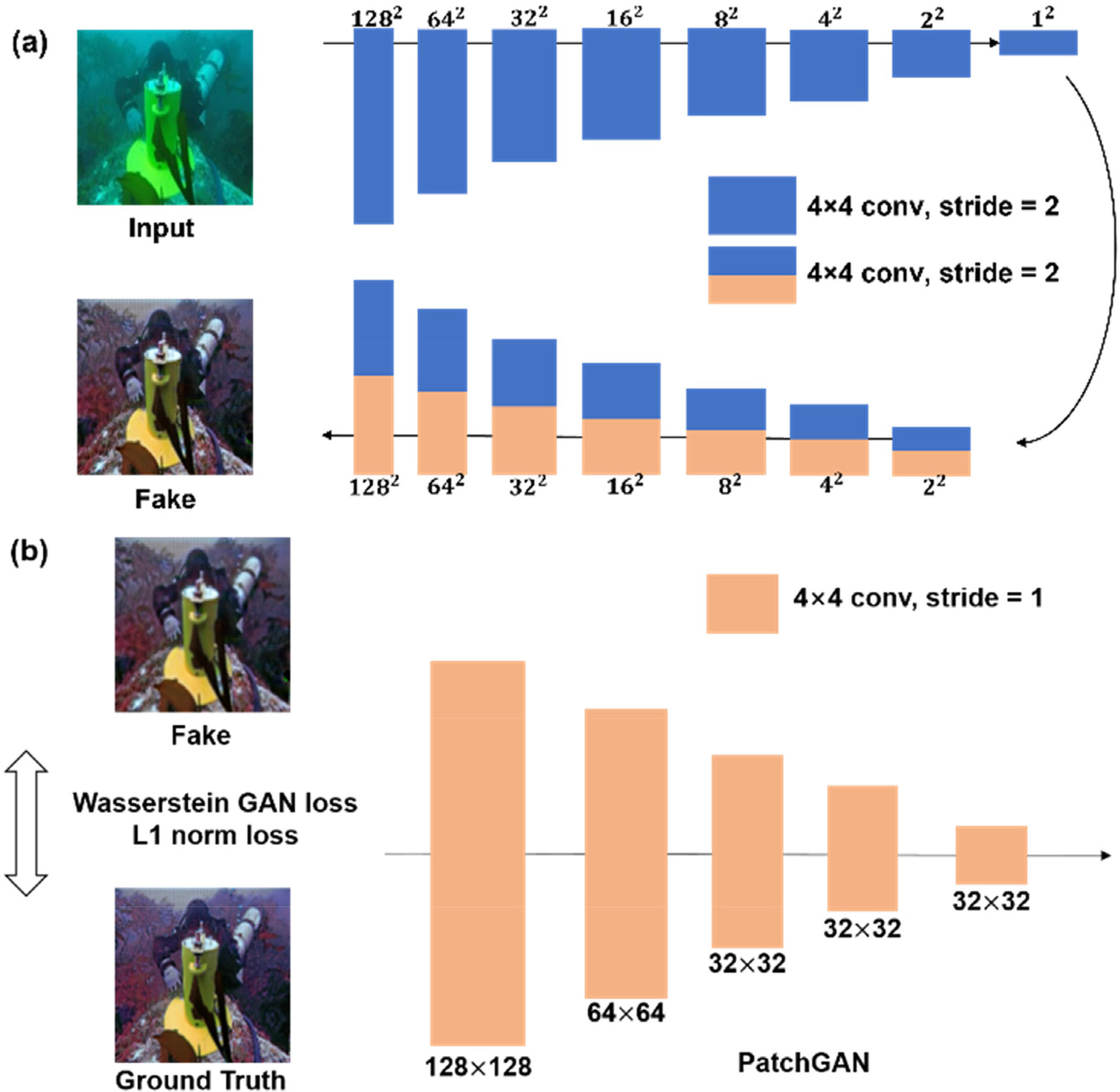

The generator and discriminator networks of UGAN are a U-Net and a PatchGAN, respectively, as shown in Fig. 4 (Ronneberger et al., 2015; Fabbri et al., 2018). The U-Net consists of eight encoder convolutional layers and seven decoder convolutional layers. The PatchGAN consists of five convolutional layers and distinguishes fake from real images by producing a 32×32 feature map using a 70×70 patch. The UGAN objective function of Eq. (4), L_UGAN, is defined from the Wasserstein GAN objective function (Arjovsky et al., 2017) of Eq. (5) and the L1-norm loss function of Eq. (6). I_GT and I_UW in Eqs. (5) and (6) refer to the ground-truth image without distortion and the distorted image, respectively. In Eq. (5), the generator and discriminator are trained adversarially using the distorted images and the ground truth, as in Eq. (1). A gradient penalty weighted by λ_GP is additionally applied to stabilize training against small fluctuations in the gradients. In Eq. (6), the difference between the image with no distortion and the image that the generator produces from the distorted image is used as a loss function. UGAN was trained with a batch size of 32, a learning rate of 0.0001, and 100 epochs.
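Eqs. (4) to (6) are not reproduced in this copy. For orientation, the block below restates the UGAN objective as we read it in Fabbri et al. (2018), rewritten with the symbols I_GT and I_UW used here. The weight symbol λ_1 and the interpolated sample x̂ (drawn between real and generated images) are taken from that reference rather than from this text, so they should be treated as assumptions.

```latex
\begin{aligned}
L_{UGAN} &= \min_G \max_D \; L_{WGAN}(G, D) + \lambda_1 L_{L1}(G) \\
L_{WGAN}(G, D) &= \mathbb{E}\big[D(I_{GT})\big] - \mathbb{E}\big[D(G(I_{UW}))\big]
  + \lambda_{GP}\, \mathbb{E}_{\hat{x}}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\big)^2\Big] \\
L_{L1}(G) &= \mathbb{E}\big[\lVert I_{GT} - G(I_{UW}) \rVert_1\big]
\end{aligned}
```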

As presented in Fig. 5, the generator of FUnIE-GAN has a U-Net structure consisting of an encoder with five convolutional layers and a decoder with five convolutional layers. The discriminator is a PatchGAN consisting of five convolutional layers that produces a 16×16 feature map using a 70×70 patch (Islam et al., 2020). FUnIE-GAN was trained with a batch size of 4, a learning rate of 0.0003, and 100 epochs. The objective function of FUnIE-GAN in Eq. (7), L_FUnIE-GAN, includes the adversarial objective function of Eq. (1), the global similarity loss of Eq. (8), which reduces blur, and the content loss of Eq. (9), which encourages the generator to reproduce the high-level features of the ground truth.

UW, GT, and Z in Eqs. (8) and (9) are the distorted image, the ground-truth image, and random noise, respectively. Eq. (8) reduces blur by penalizing the difference between the image generated by the generator G and the ground-truth image. Eq. (9) uses the function Φ, a feature extractor taken from a pre-trained VGG-19 network.

The features of the ground-truth image and of the image generated by the generator are extracted using Φ, and their difference provides a loss value that drives the generated image to be as similar as possible to the target image.
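Eqs. (7) to (9) are not reproduced in this copy. The block below is a hedged restatement based on our reading of Islam et al. (2020), using the symbols UW, GT, Z, and Φ defined above. The weight symbols λ_1 and λ_c are assumptions borrowed from that reference; their values are not given in this text.

```latex
\begin{aligned}
L_{FUnIE\text{-}GAN} &= \min_G \max_D \; L_{GAN}(G, D) + \lambda_1 L_1(G) + \lambda_c L_{con}(G) \\
L_1(G) &= \mathbb{E}_{UW,\,GT,\,Z}\big[\lVert GT - G(UW, Z) \rVert_1\big] \\
L_{con}(G) &= \mathbb{E}_{UW,\,GT,\,Z}\big[\lVert \Phi(GT) - \Phi(G(UW, Z)) \rVert_2\big]
\end{aligned}
```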

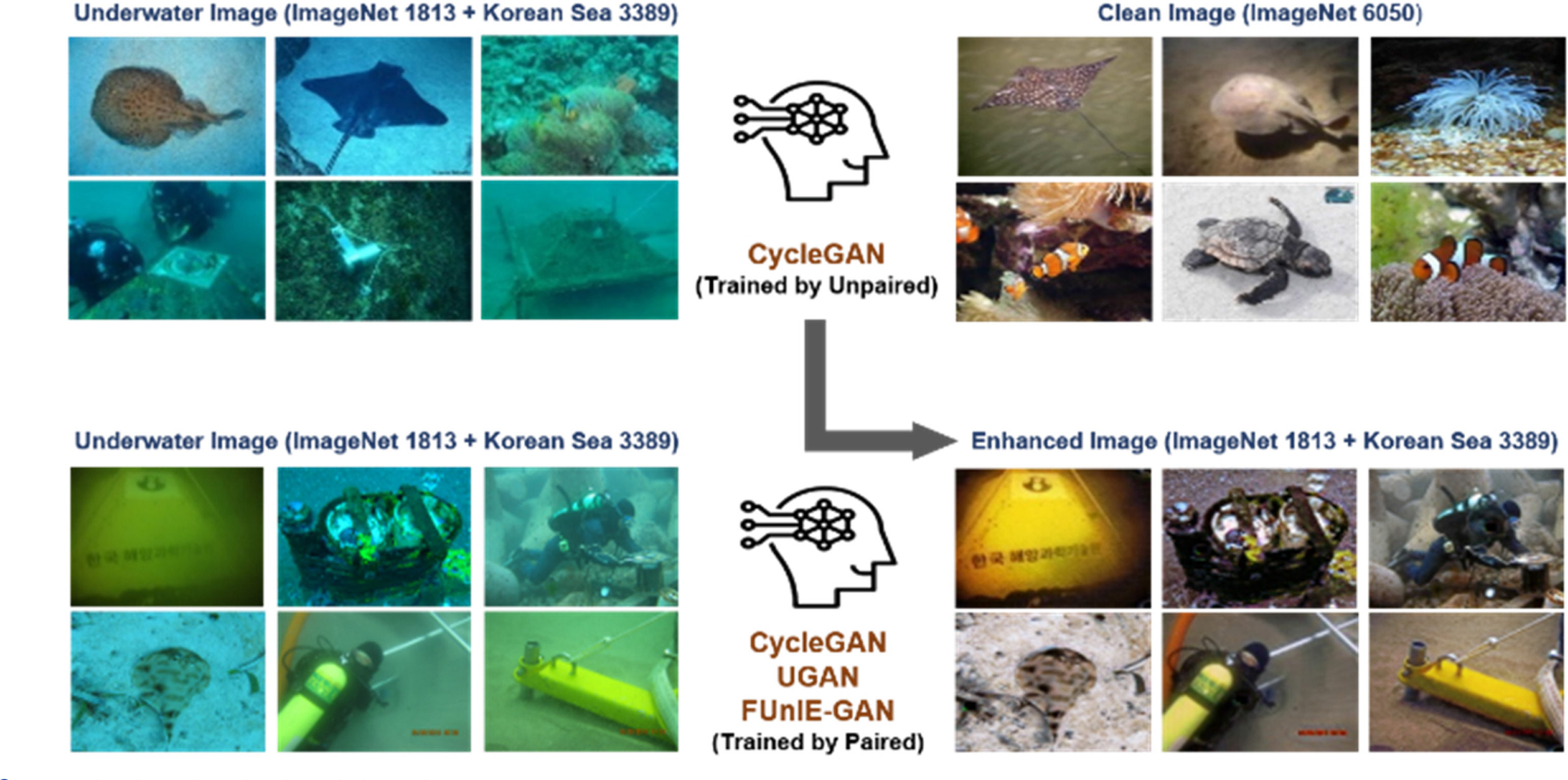

For the GAN-based underwater image improvement techniques, two training datasets were configured from underwater images and clean images. The first training dataset (unpaired) contained a total of 5,202 underwater images, consisting of 1,813 images from the ImageNet database and 3,389 underwater images taken directly in Korea's waters (Geoje, Donghae, Jeju, etc.), and 6,050 clean images selected from the ImageNet database (Deng et al., 2009).

First, CycleGAN was trained with the unpaired dataset to generate clean images of the scenes depicted in the underwater images, and a second (paired) dataset of 5,202 image pairs was thereby constructed (Fig. 6). The CycleGAN, UGAN, and FUnIE-GAN models compared in this study were then trained with the paired dataset. The training was carried out using Python version 3.6, TensorFlow version 2.4.1, Keras version 2.1.6, and two NVIDIA GeForce RTX 2080 Ti GPUs.

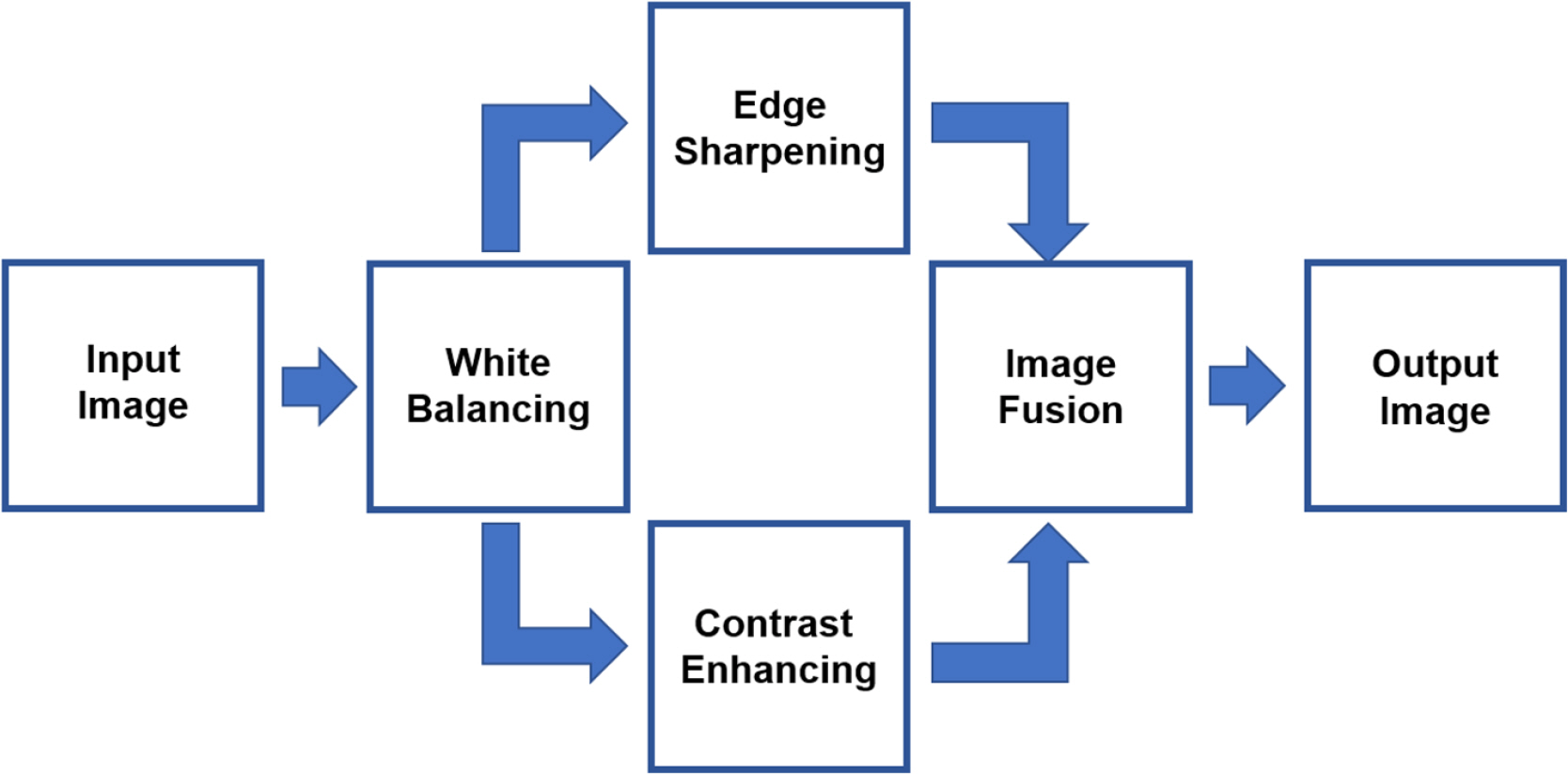

As shown in Fig. 7, the image fusion technique consists of a white balancing step for color tone correction, a step for enhancing boundaries and contrast, and a step for fusing the two enhanced images (Ancuti et al., 2017). Because green light is attenuated relatively little compared with red and blue light, it penetrates relatively more deeply underwater and is more likely to reach the camera. The white balancing step of the image fusion technique therefore uses the well-preserved green signal to compensate for the attenuation of red and blue light. Eqs. (10) and (11) compute the compensated red value WB_r and blue value WB_b of each pixel in the white-balanced image, respectively. The compensation uses the average value of each color channel (Ī_r, Ī_g, Ī_b), the color values of the pixel (I_r, I_g, I_b), and the weights α and β.

Because images photographed in different underwater environments show different hue biases, the weights α and β of Eqs. (10) and (11) must be set differently for each image. To determine α and β, the red and blue values after white balancing were set to the average value of the green light, which has relatively low attenuation, and the weight values were derived as outlined in Eqs. (12) and (13).
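Eqs. (10) to (13) are not reproduced in this copy. The sketch below assumes the compensation form of Ancuti et al. (2017), WB_r = I_r + α(Ī_g - Ī_r)(1 - I_r)I_g, and one possible reading of the weight derivation: α is chosen so that the mean of the compensated red channel equals the mean of the green channel. Both the formula and this reading are assumptions, as are the function names and pixel values. Channels are flat lists with values in [0, 1].

```python
from statistics import mean

def compensate_channel(weak, green, weight):
    """Add a green-driven correction to an attenuated channel (values in [0, 1]).

    Each pixel gains weight * (mean_green - mean_weak) * (1 - weak) * green,
    so dark pixels of the weak channel in green-rich areas are boosted most.
    """
    gap = mean(green) - mean(weak)
    return [w + weight * gap * (1.0 - w) * g for w, g in zip(weak, green)]

def matching_weight(weak, green):
    """Weight for which the compensated channel mean equals the green mean.

    Obtained by setting mean(compensated) = mean(green) and solving for the
    weight; assumes mean(green) != mean(weak).
    """
    return 1.0 / mean((1.0 - w) * g for w, g in zip(weak, green))

red = [0.10, 0.20, 0.15, 0.05]    # strongly attenuated channel
green = [0.60, 0.70, 0.50, 0.40]  # well-preserved channel

alpha = matching_weight(red, green)
wb_red = compensate_channel(red, green, alpha)
```

The same two functions apply to the blue channel with weight β. A full implementation would also clip the result to the valid intensity range.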

In the second step, the boundary signal and the contrast are each enhanced. For boundary enhancement, the unsharp masking technique of Eq. (14) applies a Gaussian filter to extract the boundary difference information relative to the original image and then reinforces it. WB is the image whose color tone bias was corrected in the white balancing step, γ is the weight that sets the degree to which the boundary difference information is applied, and Gauss is the Gaussian filter function. For contrast enhancement, the contrast limited adaptive histogram equalization (CLAHE) method was used: the image is divided into blocks of a fixed size, and the histogram of each block is equalized to obtain an image with enhanced contrast.
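Eq. (14) is not reproduced in this copy. As a minimal sketch, assuming the usual unsharp masking form, sharpened = WB + γ(WB - Gauss(WB)), the effect can be seen on a single image row. The kernel radius, sigma, and sample values are illustrative assumptions. CLAHE is not shown; a library routine such as OpenCV's `cv2.createCLAHE` is one common option.

```python
import math

def gaussian_kernel(radius, sigma):
    """Normalized 1-D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def gaussian_blur(row, radius=2, sigma=1.0):
    """Blur one image row, repeating the edge pixels at the borders."""
    kernel = gaussian_kernel(radius, sigma)
    last = len(row) - 1
    blurred = []
    for i in range(len(row)):
        acc = 0.0
        for k, offset in zip(kernel, range(-radius, radius + 1)):
            j = min(max(i + offset, 0), last)  # clamp index at the borders
            acc += k * row[j]
        blurred.append(acc)
    return blurred

def unsharp_mask(row, gamma=1.0):
    """Add gamma times the detail (original minus blurred) back to the original."""
    blurred = gaussian_blur(row)
    return [p + gamma * (p - b) for p, b in zip(row, blurred)]

# A step edge: sharpening steepens it and leaves pixels far from the edge unchanged.
edge = [0.2] * 5 + [0.8] * 5
sharpened = unsharp_mask(edge)
```

Larger values of γ increase the overshoot on both sides of the edge, which is why the weight controls how strongly boundary information is applied.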

In the third step, the image fusion of Eq. (15), the boundary-enhanced image and the contrast-enhanced image from the second step are fused using weights to obtain the final improved image (J). The weights in Eq. (15) are ω1, which is obtained by applying a Laplacian filter to the luminance channel, and ω2, which is the difference between the hue channel and the luminance channel.
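Eq. (15) is not reproduced in this copy, so the exact way the weights enter the fusion cannot be recovered here. The sketch below assumes one weight map per input image, normalized per pixel so that the weights sum to one, in the spirit of Ancuti et al. (2017). The function name, the small constant delta, and the sample values are all assumptions.

```python
def fuse_pixels(inputs, weights, delta=1e-6):
    """Blend K input images pixel by pixel using normalized weight maps.

    inputs, weights: lists of K flat images of equal length.
    delta keeps the normalization defined where all weights are zero.
    """
    k = len(inputs)
    fused = []
    for i in range(len(inputs[0])):
        total = sum(w[i] for w in weights) + k * delta
        fused.append(sum((w[i] + delta) / total * img[i]
                         for img, w in zip(inputs, weights)))
    return fused

sharpened = [0.9, 0.1, 0.5]  # boundary-enhanced image
equalized = [0.5, 0.5, 0.5]  # contrast-enhanced image
w_sharp = [1.0, 0.0, 0.0]    # hypothetical weight map for the first input
w_equal = [0.0, 1.0, 0.0]    # hypothetical weight map for the second input

fused = fuse_pixels([sharpened, equalized], [w_sharp, w_equal])
```

Each output pixel follows whichever input has the larger weight at that location, and falls back to a plain average where neither weight map responds.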

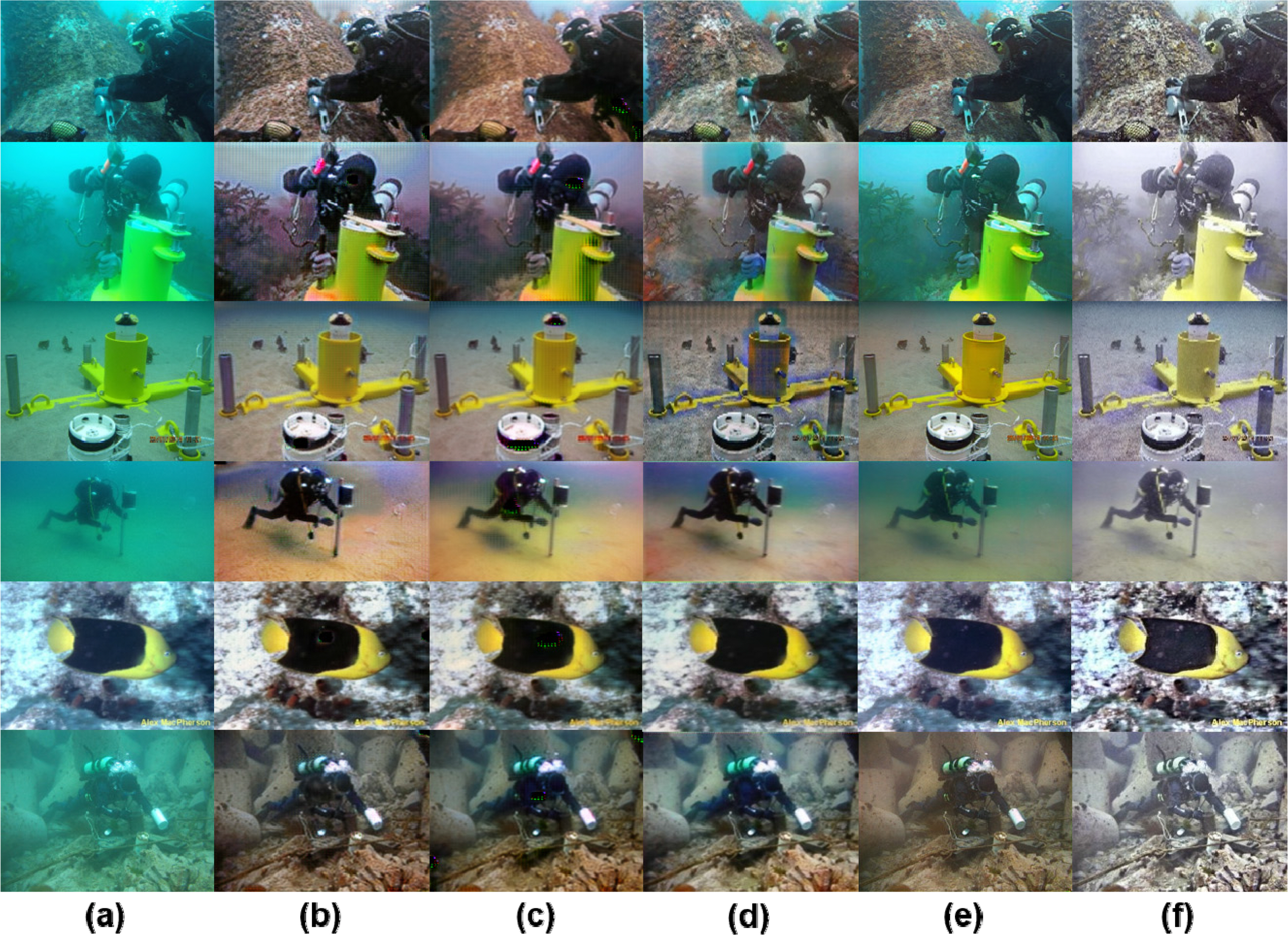

Fig. 8 shows qualitative results of applying the trained GAN techniques and image fusion to underwater images taken in real sea areas. CycleGAN and UGAN significantly improved the overall image quality in all underwater environments; however, in some cases, artifacts and noise were produced (see the fish body in Fig. 8(c)). The performance of FUnIE-GAN varied widely with the underwater environment. Image fusion improved the hue, but could not reduce the noise in areas affected by reflected light.

UIQM and UCIQE were measured to quantitatively compare the underwater optical image improvement techniques considered in this study. UIQM is an index that evaluates the quality of underwater images as a weighted sum of colorfulness, sharpness, and contrast, as expressed in Eq. (16) (Panetta et al., 2015). In the original reference in which UIQM was devised, the three weights were estimated from the colorfulness, sharpness, and contrast of 30 images obtained in various underwater environments with various imaging equipment; they were set to a1=0.0282, a2=0.2983, and a3=0.0339.
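Eq. (16) is not reproduced in this copy. As a minimal sketch of the weighted sum it describes, the function below combines three component scores using the weights quoted in this section. The component names UICM, UISM, and UIConM follow Panetta et al. (2015), computing them from an image is outside the scope of the sketch, and readers may wish to check the quoted weights against that reference.

```python
A1, A2, A3 = 0.0282, 0.2983, 0.0339  # weights as quoted in this section

def uiqm(uicm, uism, uiconm):
    """Weighted sum of colorfulness (UICM), sharpness (UISM), and contrast (UIConM)."""
    return A1 * uicm + A2 * uism + A3 * uiconm

# With these weights, one unit of sharpness moves the score roughly ten times
# as much as one unit of colorfulness.
unit_score = uiqm(1.0, 1.0, 1.0)
sharpness_gain = uiqm(0.0, 1.0, 0.0)
colorfulness_gain = uiqm(1.0, 0.0, 0.0)
```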

UCIQE is an index calculated as a weighted sum of the chroma, luminance, and saturation of colors (Yang and Sowmya, 2015). It is expressed in Eq. (17), and the weights were estimated from the chroma, luminance, and saturation of underwater images in the original reference in which UCIQE was devised. They were set to b1=0.4680, b2=0.2745, and b3=0.2576.
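Eq. (17) is not reproduced in this copy. The sketch below assumes the three terms are the standard deviation of chroma, the contrast of luminance, and the mean saturation, and it assumes that luminance contrast is the gap between the brightest and darkest 1% of pixels, as in common implementations. Those definitions, the function names, and the per-pixel sample values are assumptions; a real pipeline would derive the inputs from a color space conversion.

```python
from statistics import mean, pstdev

B1, B2, B3 = 0.4680, 0.2745, 0.2576  # weights as quoted in this section

def luminance_contrast(luminance, fraction=0.01):
    """Mean of the brightest fraction minus mean of the darkest fraction."""
    ordered = sorted(luminance)
    n = max(1, int(len(ordered) * fraction))
    return mean(ordered[-n:]) - mean(ordered[:n])

def uciqe(chroma, luminance, saturation):
    """Weighted sum of chroma spread, luminance contrast, and mean saturation."""
    return (B1 * pstdev(chroma)
            + B2 * luminance_contrast(luminance)
            + B3 * mean(saturation))

# Hypothetical per-pixel values for a washed-out image and a vivid one.
flat = uciqe([0.3] * 200, [0.5] * 200, [0.2] * 200)
vivid = uciqe([0.1, 0.5] * 100, [0.1, 0.9] * 100, [0.6] * 200)
```

The washed-out image scores only through its mean saturation, whereas the vivid image gains from all three terms.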

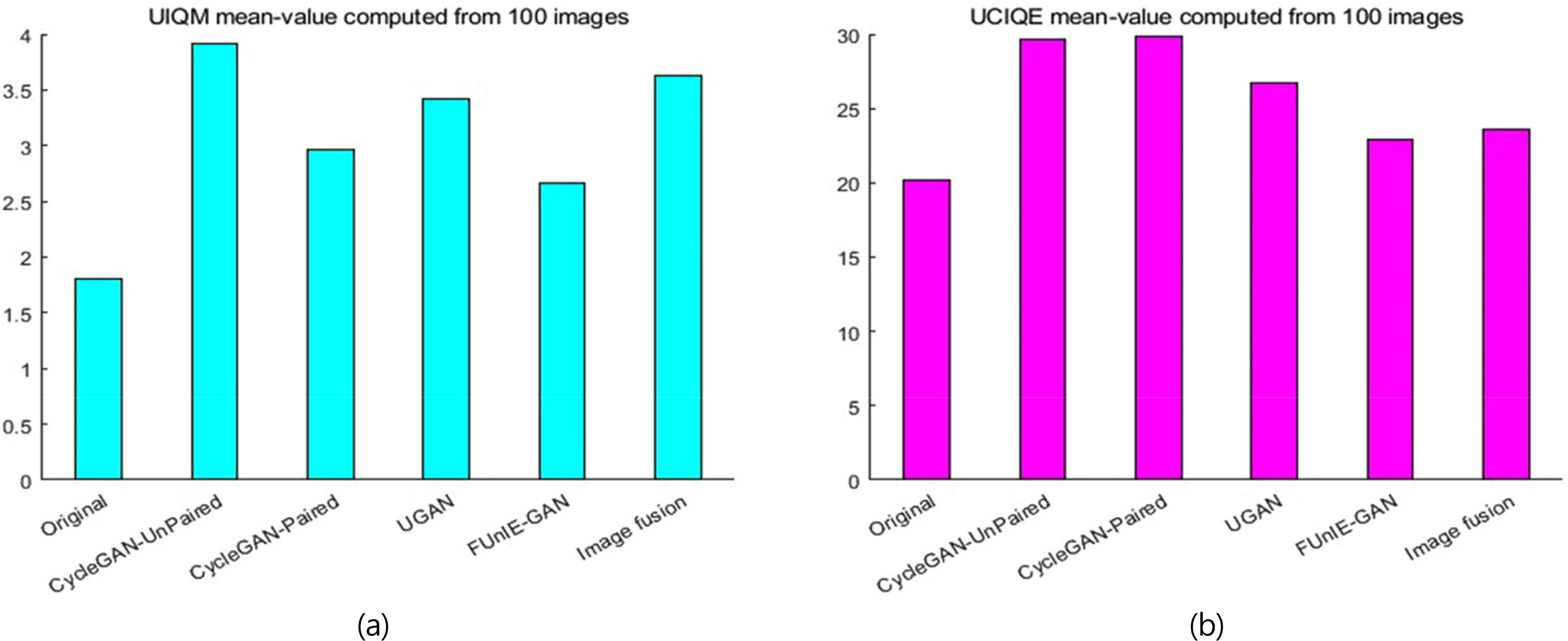

Table 1 and Fig. 9 quantitatively compare the means and standard deviations of UIQM and UCIQE for 100 underwater images from real sea areas and for the images improved with the GAN techniques and the image fusion technique. All underwater optical image improvement techniques improved UIQM and UCIQE compared with the original underwater images. Among the GAN techniques, CycleGAN-Unpaired showed the best average UIQM and UCIQE. UGAN trained on the paired dataset and the image fusion technique showed similar performance. FUnIE-GAN showed the largest deviation in UIQM and UCIQE.

Table 2 compares the average computation time of the studied underwater optical image improvement methods. To make the comparison under the same conditions, all 100 underwater images were resized to 256×256. Among the GAN techniques, UGAN was the fastest, with a speed similar to that of the image fusion technique.

In this paper, to improve the quality of underwater images, CycleGAN, UGAN, and FUnIE-GAN deep learning techniques and image fusion methods were applied to 100 underwater images taken in real sea areas, and the quality improvement was compared and evaluated based on UIQM and UCIQE. CycleGAN and UGAN showed excellent performance qualitatively and quantitatively in various underwater environments, but artifacts were generated in some cases. FUnIE-GAN had a very large performance variation depending on the underwater environment, and image fusion showed good performance in color tone correction and sharpness improvement. It is expected that an underwater situation can be more accurately visualized through the improvement of underwater images, which can then be used for monitoring underwater works and planning autonomous driving routes for unmanned vehicles.

Funding

This study was conducted with the support of the National Research Foundation of Korea (Development of Underwater Stereo Camera Stereoscopic Visualization Technology, NRF-2021R1A2C2006682) with funding from the Ministry of Science and ICT in 2021 and the Korea Institute of Marine Science & Technology Promotion (establishment of test evaluation ships and systems for the verification of the marine equipment performance in real sea areas) with funding from the Ministry of Oceans and Fisheries in 2021.

Fig. 1. Light attenuation by water and floating particles causes low visibility with haziness and color cast in underwater optical images.

Fig. 3. Network architectures of CycleGAN: (a) generator based on Resnet-9block and (b) discriminator based on PatchGAN.

Fig. 4. Network architecture of UGAN: (a) generator based on U-Net and (b) discriminator based on PatchGAN.

Fig. 5. Network architecture of FUnIE-GAN: (a) generator based on U-Net and (b) discriminator based on PatchGAN.

Fig. 8. (a) Original and enhanced underwater images by (b) CycleGAN-Unpaired, (c) CycleGAN-Paired, (d) UGAN, (e) FUnIE-GAN, and (f) Image fusion.

Fig. 9. Comparative graphs of (a) mean UIQM and (b) mean UCIQE computed from 100 underwater images.

Table 1. Comparison of UIQM and UCIQE computed from 100 underwater images and the enhanced images by GANs and Image fusion (SD: Standard deviation).

References

Ancuti, CO., Ancuti, C., De Vleeschouwer, C., & Bekaert, P. (2017). Color Balance and Fusion for Underwater Image Enhancement. IEEE Transactions on Image Processing, 27(1), 379-393.

https://doi.org/10.1109/TIP.2017.2759252

Arjovsky, M., Chintala, S., & Bottou, L. (2017). Wasserstein Generative Adversarial Networks. Proceedings of the 34th International Conference on Machine Learning, PMLR, 70, 214-223.

Bharal, S. (2015). Review of Underwater Image Enhancement Techniques. International Research Journal of Engineering and Technology, 2(3), 340-344.

Chen, X., Yu, J., Kong, S., Wu, Z., Fang, X., & Wen, L. (2019). Towards Real-Time Advancement of Underwater Visual Quality with GAN. IEEE Transactions on Industrial Electronics, 66(12), 9350-9359.

https://doi.org/10.1109/TIE.2019.2893840

Deng, J., Dong, W., Socher, R., Li, LJ., Li, K., & Fei-Fei, L. (2009, June). ImageNet: A Large-Scale Hierarchical Image Database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, 248-255.

https://doi.org/10.1109/CVPR.2009.5206848

Fabbri, C., Islam, MJ., & Sattar, J. (2018, May). Enhancing Underwater Imagery Using Generative Adversarial Networks. In 2018 IEEE International Conference on Robotics and Automation (ICRA), 7159-7165.

https://doi.org/10.1109/ICRA.2018.8460552

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative Adversarial Nets. Advances in Neural Information Processing Systems, 27.

Han, M., Lyu, Z., Qiu, T., & Xu, M. (2018). A Review on Intelligence Dehazing and Color Restoration for Underwater Images. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 50(5), 1820-1832.

https://doi.org/10.1109/TSMC.2017.2788902

He, K., Sun, J., & Tang, X. (2010). Single Image Haze Removal Using Dark Channel Prior. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(12), 2341-2353.

https://doi.org/10.1109/TPAMI.2010.168

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep Residual Learning for Image Recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770-778.

Islam, MJ., Xia, Y., & Sattar, J. (2020). Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robotics and Automation Letters, 5(2), 3227-3234.

https://doi.org/10.1109/LRA.2020.2974710

Kim, DG., & Kim, SM. (2020). Single Image-based Enhancement Techniques for Underwater Optical Imaging. Journal of Ocean Engineering and Technology, 34(6), 442-453.

https://doi.org/10.26748/KSOE.2020.030

Li, CY., & Cavallaro, A. (2020, October). Cast-GAN: Learning to Remove Colour Cast From Underwater Images. In 2020 IEEE International Conference on Image Processing (ICIP), 1083-1087.

https://doi.org/10.1109/ICIP40778.2020.9191157

Li, WJ., Gu, B., Huang, JT., Wang, SY., & Wang, MH. (2012). Single Image Visibility Enhancement in Gradient Domain. IET Image Processing, 6(5), 589-595.

Mobley, CD. (1994). Light and Water: Radiative Transfer in Natural Waters. Academic Press.

Panetta, K., Gao, C., & Agaian, S. (2015). Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE Journal of Oceanic Engineering, 41(3), 541-551.

https://doi.org/10.1109/JOE.2015.2469915

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, 234-241.

https://doi.org/10.1007/978-3-319-24574-4_28

Schettini, R., & Corchs, S. (2010). Underwater Image Processing: State of the Art of Restoration and Image Enhancement Methods. EURASIP Journal on Advances in Signal Processing, 2010, 1-14.

https://doi.org/10.1155/2010/746052

Uplavikar, PM., Wu, Z., & Wang, Z. (2019, May). All-in-One Underwater Image Enhancement Using Domain-Adversarial Learning. In CVPR Workshops, 1-8.

Wang, J., Li, P., Deng, J., Du, Y., Zhuang, J., Liang, P., & Liu, P. (2020). CA-GAN: Class-Condition Attention GAN for Underwater Image Enhancement. IEEE Access, 8, 130719-130728.

https://doi.org/10.1109/ACCESS.2020.3003351

Yang, M., & Sowmya, A. (2015). An Underwater Color Image Quality Evaluation Metric. IEEE Transactions on Image Processing, 24(12), 6062-6071.

https://doi.org/10.1109/TIP.2015.2491020

Zhang, T., Li, Y., & Takahashi, S. (2021). Underwater Image Enhancement Using Improved Generative Adversarial Network. Concurrency and Computation: Practice and Experience, 33(22), e5841.

https://doi.org/10.1002/cpe.5841
