Balas, CE., Koc, L., & Balas, L. (2004). Predictions of Missing Wave Data by Recurrent Neuronets. Journal of Waterway, Port, Coastal, and Ocean Engineering, 130(5), 256-265. https://doi.org/10.1061/(ASCE)0733-950X(2004)130:5(256)

Boser, BE., Guyon, I., & Vapnik, VN. (1992). A Training Algorithm for Optimal Margin Classifiers. Proceedings of the Fifth Annual Workshop on Computational Learning Theory, 5, p 144-152. Pittsburgh: ACM. https://doi.org/10.1145/130385.130401

Chen, J., Pillai, AC., Johanning, L., & Ashton, I. (2021). Using Machine Learning to Derive Spatial Wave Data: A Case Study for a Marine Energy Site. Environmental Modelling & Software, 142, 105066. https://doi.org/10.1016/j.envsoft.2021.105066

De Rouck, J., Van de Walle, B., & Geeraerts, J. (2004). Crest Level Assessment of Coastal Structures by Full Scale Monitoring, Neural Network Prediction and Hazard Analysis on Permissible Wave Overtopping (CLASH). Proceedings of the EurOCEAN 2004 (European Conference on Marine Science & Ocean Technology). Galway, Ireland. EVK3-CT-2001-00058 p 261-262.

Dwarakish, GS., Rakshith, S., & Natesan, U. (2013). Review on Applications of Neural Network in Coastal Engineering. Artificial Intelligent Systems and Machine Learning, 5(7), 324-331.

Den Bieman, JP., Wilms, JM., Van den Boogaard, HFP., & Van Gent, MRA. (2020). Prediction of Mean Wave Overtopping Discharge Using Gradient Boosting Decision Trees. Water, 12(6), 1703. https://doi.org/10.3390/w12061703

Pullen, T., Allsop, NWH., Bruce, T., Kortenhaus, A., Schüttrumpf, H., & van der Meer, JW. (2007). European Manual for the Assessment of Wave Overtopping. In: Pullen T, Allsop NWH, Bruce T, Kortenhaus A, Schüttrumpf H, van der Meer JW, eds. HR Wallingford.

Van der Meer, JW., Allsop, NWH., Bruce, T., De Rouck, J., Kortenhaus, A., Pullen, T., & Zanuttigh, B. (2018). Manual on Wave Overtopping of Sea Defences and Related Structures: An Overtopping Manual Largely Based on European Research, but for Worldwide Application (2nd ed.). EurOtop. Retrieved from http://www.overtopping-manual.com/assets/downloads/EurOtop_II_2018_Final_version.pdf

Formentin, SM., Zanuttigh, B., & van der Meer, JW. (2017). A Neural Network Tool for Predicting Wave Reflection, Overtopping and Transmission. Coastal Engineering Journal, 59(1), 1750006-1-1750006-31. https://doi.org/10.1142/S0578563417500061

Gandomi, M., Moharram, DP., Iman, V., & Mohammad, RN. (2020). Permeable Breakwaters Performance Modeling: A Comparative Study of Machine Learning Techniques. Remote Sensing, 12(11), 1856. https://doi.org/10.3390/rs12111856

Goyal, R., Singh, K., & Hegde, AV. (2014). Quarter Circular Breakwater: Prediction of Transmission Using Multiple Regression and Artificial Neural Network. Marine Technology Society Journal, 48(1.

Gracia, S., Olivito, J., Resano, J., Martin-del-Brio, B., Alfonso, M., & Alvarez, E. (2021). Improving Accuracy on Wave Height Estimation Through Machine Learning Techniques. Ocean Engineering, 236, 108699. https://doi.org/10.1016/j.oceaneng.2021.108699

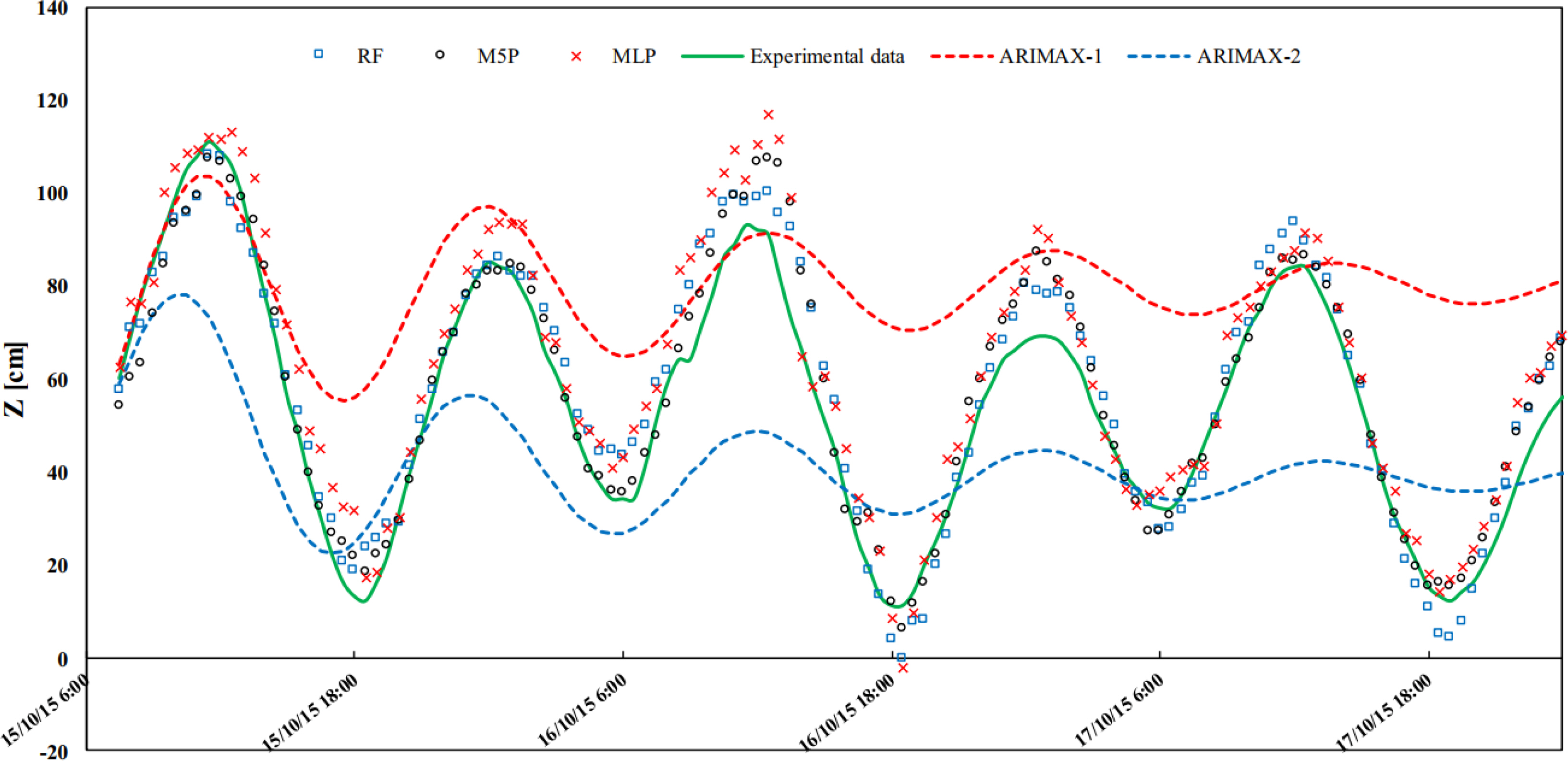

Granata, F., & Di Nunno, F. (2021). Artificial Intelligence Models for Prediction of the Tide Level in Venice. Stochastic Environmental Research and Risk Assessment, 35, 2537-2548. https://doi.org/10.1007/s00477-021-02018-9

Hosseinzadeh, S., Etemad-Shahidi, A., & Koosheh, A. (2021). Prediction of Mean Wave Overtopping at Simple Sloped Breakwaters Using Kernel-based Methods. Journal of Hydroinformatics, 23(5), 1030-1049. https://doi.org/10.2166/hydro.2021.046

Kang, DH., & Oh, SJ. (2019). A Study of Machine Learning Model for Prediction of Swelling Waves Occurrence on East Sea. Journal of Korean Institute of Information Technology, 17(9), 11-17. https://doi.org/10.14801/jkiit.2019.17.9.11

Kankal, M., & Yuksek, O. (2012). Artificial Neural Network Approach for Assessing Harbor Tranquility: The Case of Trabzon Yacht Harbor, Turkey. Applied Ocean Research, 38, 23-31. https://doi.org/10.1016/j.apor.2012.05.009

Kim, DH., Kim, YJ., Hur, DS., Jeon, HS., & Lee, CH. (2010). Calculating Expected Damage of Breakwater Using Artificial Neural Network for Wave Height Calculation. Journal of Korean Society of Coastal and Ocean Engineers, 22(2), 126-132.

Kim, HI., & Kim, BH. (2020). Analysis of Major Rainfall Factors Affecting Inundation Based on Observed Rainfall and Random Forest. Journal of the Korean Society of Hazard Mitigation, 20(6), 301-310. https://doi.org/10.9798/KOSHAM.2020.20.6.301

Kim, T., Kwon, S., & Kwon, Y. (2021). Prediction of Wave Transmission Characteristics of Low-Crested Structures with Comprehensive Analysis of Machine Learning. Sensors, 21(24), 8192. https://doi.org/10.3390/s21248192

Kim, YE., Lee, KE., & Kim, GS. (2020). Forecast of Drought Index Using Decision Tree Based Methods. Journal of the Korean Data & Information Science Society, 31(2), 273-288. https://doi.org/10.7465/jkdi.2020.31.2.273

Koo, MH., Park, EG., Jeong, J., Lee, HM., Kim, HG., Kwon, MJ., & Jo, SB. (2016). Applications of Gaussian Process Regression to Groundwater Quality Data. Journal of Soil and Groundwater Environment, 21(6), 67-79. https://doi.org/10.7857/JSGE.2016.21.6.067

Kuntoji, G., Manu, R., & Subba, R. (2020). Prediction of Wave Transmission over Submerged Reef of Tandem Breakwater Using PSO-SVM and PSO-ANN Techniques. ISH Journal of Hydraulic Engineering, 26(3), 283-290. https://doi.org/10.1080/09715010.2018.1482796

Lee, GH., Kim, TG., & Kim, DS. (2020). Prediction of Wave Breaking Using Machine Learning Open Source Platform. Journal of Korean Society of Coastal and Ocean Engineers, 32(4), 262-272. https://doi.org/10.9765/KSCOE.2020.32.4.262

Lee, JS., & Suh, KD. (2020). Development of Stability Formulas for Rock Armor and Tetrapods Using Multigene Genetic Programming. Journal of Waterway, Port, Coastal, and Ocean Engineering, 146(1), 04019027. https://doi.org/10.1061/(ASCE)WW.1943-5460.0000540

Lee, SB., & Suh, KD. (2019). Development of Wave Overtopping Formulas for Inclined Seawalls Using GMDH Algorithm. KSCE Journal of Civil Engineering, 23, 1899-1910. https://doi.org/10.1007/s12205-019-1298-1

Li, B., Yin, J., Zhang, A., & Zhang, Z. (2018). A Precise Tidal Level Prediction Method Using Improved Extreme Learning Machine with Sliding Data Window. In 2018 37th Chinese Control Conference (CCC), 1787-1792. http://doi.org/10.23919/ChiCC.2018.8482902

Mahjoobi, J., Etemad-Shahidi, A., & Kazeminezhad, MH. (2008). Hindcasting of Wave Parameters Using Different Soft Computing Methods. Applied Ocean Research, 30(1), 28-36. https://doi.org/10.1016/j.apor.2008.03.002

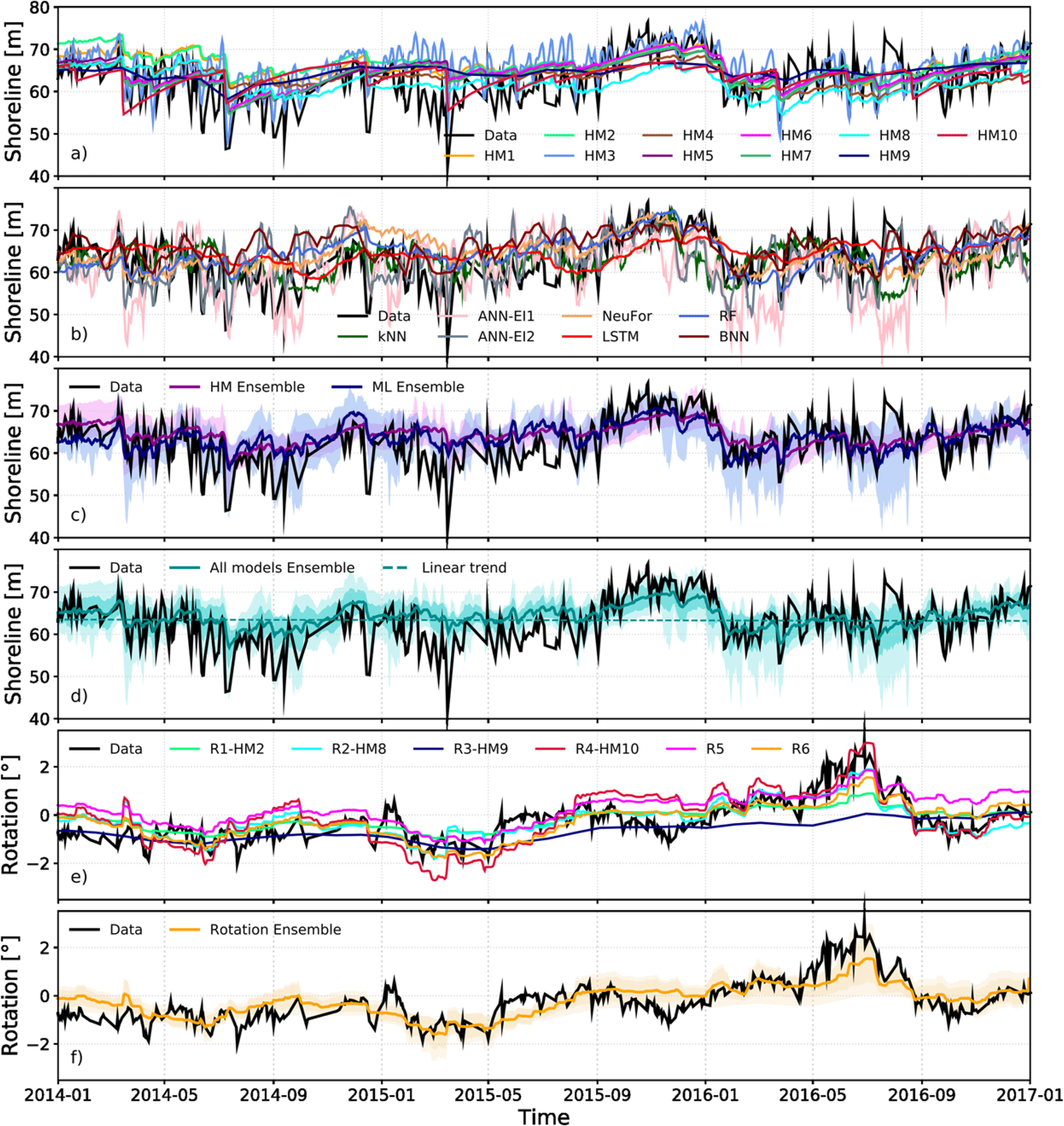

Montaño, J., Coco, G., Antolínez, JAA., Beuzen, T., Bryan, KR., Cagigal, L., & Vos, K. (2020). Blind Testing of Shoreline Evolution Models. Scientific Reports, 10, 2137. https://doi.org/10.1038/s41598-020-59018-y

Na, YY., Park, JG., & Moon, IC. (2017). Analysis of Approval Ratings of Presidential Candidates Using Multidimensional Gaussian Process and Time Series Text Data. Proceedings of the Korean Operations Research And Management Society, Yeosu. 1151-1156.

Park, JS., Ahn, KM., Oh, CY., & Chang, YS. (2020). Estimation of Significant Wave Heights from X-Band Radar Using Artificial Neural Network. Journal of Korean Society of Coastal and Ocean Engineers, 32(6), 561-568. https://doi.org/10.9765/KSCOE.2020.32.6.561

Passarella, M., Goldstein, EB., De Muro, S., & Coco, G. (2018). The Use of Genetic Programming to Develop a Predictor of Swash Excursion on Sandy Beaches. Natural Hazards and Earth System Sciences, 18, 599-611. https://doi.org/10.5194/nhess-18-599-2018

Rigos, A., Tsekouras, GE., Chatzipavlis, A., & Velegrakis, AF. (2016). Modeling Beach Rotation Using a Novel Legendre Polynomial Feedforward Neural Network Trained by Nonlinear Constrained Optimization. In: Iliadis L, Maglogiannis I, eds. Artificial Intelligence Applications and Innovations. AIAI 2016, IFIP Advances in Information and Communication Technology, 475, p 167-179.

Shahabi, S., Khanjani, M., & Kermani, MH. (2016). Significant Wave Height Forecasting Using GMDH Model. International Journal of Computer Applications, 133(6), 13-16.

Shamshirband, S., Mosavi, A., Rabczuk, T., Nabipour, N., & Chau, KW. (2020). Prediction of Significant Wave Height; Comparison Between Nested Grid Numerical Model, and Machine Learning Models of Artificial Neural Networks, Extreme Learning and Support Vector Machines. Engineering Applications of Computational Fluid Mechanics, 14(1), 805-817. https://doi.org/10.1080/19942060.2020.1773932

Van der Meer, JW. (1988). Rock Slopes and Gravel Beaches under Wave Attack (Ph.D. thesis). Delft University of Technology, Delft Hydraulics Report; 396.

Wilson, KE., Adams, PN., Hapke, CJ., Lentz, EE., & Brenner, O. (2015). Application of Bayesian Networks to Hindcast Barrier Island Morphodynamics. Coastal Engineering, 102, 30-43. https://doi.org/10.1016/j.coastaleng.2015.04.006

Zanuttigh, B., Formentin, SM., & Van der Meer, JW. (2014). Advances in Modelling Wave-structure Interaction Through Artificial Neural Networks. Coastal Engineering Proceedings, 1(34), 693.

Zanuttigh, B., Formentin, SM., & Van der Meer, JW. (2016). Prediction of Extreme and Tolerable Wave Overtopping Discharges Through an Advanced Neural Network. Ocean Engineering, 127, 7-22.